IDEAS

Experiential learning improves teams’ inquiry cycles: ONLINE EXCLUSIVE

By Matthew Swenson, Ann Helfman, Alix Horton and Liz Bergeron

Categories: Collaboration, Continuous improvement, Data, Improvement science/networks

April 2023

In this era of extreme partisanship, increased school and district accountability, and continuing dialogue about the ongoing impacts of the global pandemic, education leaders are under pressure to show measurable and meaningful improvement in outcomes — particularly, and rightly so, for students from marginalized groups.

Schools are under increased pressure to improve, but the result is too often a wide-scale implementation of ideas without a clear hypothesis, success measures, or buy-in from staff. The consequence: frustration, burnout, and the perpetuation of inequities.

With an acknowledgment that all improvement requires change but not all changes are an improvement, improvement science provides a systematic approach to change guided by three improvement questions:

- What are we trying to accomplish?

- What changes might we make that will result in an improvement?

- How will we know that change is actually an improvement?

The goal of improvement science is not to simply improve outcomes but to improve outcomes and better understand the system that is producing current results. One way to better learn about the system is by testing change ideas through a plan-do-study-act (PDSA) cycle (Langley et al., 2009).

As with many inquiry models, the quality of the disciplined inquiry is paramount to the learning that a PDSA cycle produces. Unfortunately, due to the structure and design of schools and districts and the incentives guiding them, the skills and mindsets necessary to run quality PDSAs are not inherent in many schools and districts. They must be cultivated through learning and collaborative sense-making.

Our work

The New Tech Network College Access Network is a network hub that partners with a diverse group of schools and districts in Arkansas, California, and Texas to improve college access by leveraging improvement science practices. The network has a specific, common aim that binds us: improve postsecondary enrollment rates for students of color and students who are economically disadvantaged.

At this point, you may be thinking: “I am not engaging in college access work. Should I read on?” Please do. The PDSA is flexible in that it can be used to test different types of changes across an organization.

Over the last few years, College Access Network coaches have helped schools better understand their systems of support for students and test change ideas in practice so that school teams build confidence and capacity in identifying the most promising ideas to scale and spread. Recently, when digging into the change ideas that our school partners were testing, we noticed that many were large in scale and scope, and some were drafted without a clear hypothesis, learning questions, predictions, or measures.

Many school teams were being thoughtful in selecting change ideas, but they were not slowing down to consider what they wanted to learn from their test. This was likely caused by a variety of factors: the ongoing effects of the global pandemic, a long list of competing priorities within schools, and, most importantly, the lack of the specific skills needed to operate quality PDSAs. It was time to innovate.

As an organization, we believe in the power of experience and collaborative sense-making. We did not want to simply tell participants about the PDSA, but rather to design experiential learning where participants could see the power of the PDSA firsthand. During our most recent school site visit, we tested a learning experience for school teams to increase the quality of their PDSA cycles. We called this test a “live PDSA.”

The live PDSA activity included running a full PDSA cycle in about two hours. We hypothesized that if we supported school teams through an entire cycle, they would feel better equipped to run small-scale, quality PDSA cycles more regularly.

Before launching the live PDSA activity, we asked teams to take an assessment to capture their perceived confidence in key testing skills: articulating a hypothesis, developing learning questions and a data collection plan, reflecting on what they learned, and identifying next steps.

During the test, we focused on a change idea for increasing the percentage of students who apply to three or more postsecondary institutions, but teams could substitute any improvement aim in its place.

The live PDSA

During a typical site visit, we gather a team of four to eight educators for collaborative learning. High school teams often include one or more counselors, administrators, and teachers, and they have the support of an identified district sponsor.

Here are examples of how the live PDSA activity progressed through the four phases of the cycle.

Plan

During the plan phase, we provided teams with a few potential change ideas and the option to adjust or create something new. Teams then had time to plan their test (find the template we use here: bit.ly/3ipCJKa).

They considered: What will we try? What is our hypothesis (if x, then y)? What do we want to learn from this test? What do we think will happen? What data could help us reflect on these predictions later? The coach observed and found an appropriate time to provide feedback or ask a probing question.

One school’s live PDSA journey: For our live PDSA activity, we provided school teams with postsecondary application change ideas from our network’s change package. One team decided to test a check-in protocol to gauge seniors’ progress on applying to three or more postsecondary institutions.

They spent their planning time drafting interview questions and, to ensure they had data for the study conversation, calibrating on their note-taking strategy. They took notes in a shared Google Doc. After the team drafted the plan section of their PDSA, their coach provided feedback. The team drafted the following before launching the test:

- Theory: If we ask seniors questions about their postsecondary plans, then they will gain awareness of their progress and be able to articulate a postsecondary plan and next steps.

- Learning questions:

  - How many students have applied to three or more postsecondary institutions?

  - How many students will articulate their postsecondary plans?

  - What will students identify as their greatest early wins and barriers in the postsecondary application process?

  - How many students will indicate that this conversation was helpful or ask for a follow-up one-on-one conversation?

- The school team made predictions connected to each of the learning questions.
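The plan elements listed above can also be captured in a simple structured record so that no element is skipped before a test launches. The following is a minimal Python sketch, not the network's template: the names `PdsaPlan`, `LearningQuestion`, and `missing_predictions` are hypothetical, and the prediction text is a placeholder because the team's actual predictions are not listed in this article.

```python
from dataclasses import dataclass, field


@dataclass
class LearningQuestion:
    """One learning question and the prediction the team records for it."""
    question: str
    prediction: str = ""  # left empty until the team writes a prediction


@dataclass
class PdsaPlan:
    """Hypothetical record of the plan phase of a PDSA cycle."""
    change_idea: str
    hypothesis: str  # written in "if x, then y" form
    questions: list[LearningQuestion] = field(default_factory=list)

    def missing_predictions(self) -> list[str]:
        """Return the learning questions that still lack a prediction."""
        return [q.question for q in self.questions if not q.prediction.strip()]


plan = PdsaPlan(
    change_idea="Check-in protocol on seniors' application progress",
    hypothesis=(
        "If we ask seniors questions about their postsecondary plans, "
        "then they will gain awareness of their progress and be able to "
        "articulate a postsecondary plan and next steps."
    ),
    questions=[
        LearningQuestion(
            "How many students will articulate their postsecondary plans?",
            prediction="(placeholder) the team's prediction goes here",
        ),
        LearningQuestion(
            "How many students have applied to three or more institutions?"
        ),
    ],
)

# Questions that still need a prediction before the do phase starts.
print(plan.missing_predictions())
```

A check like this is only a prompt for the team's conversation; the value of the plan phase comes from the predictions themselves, not from the record.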

Do

During the do phase, team members worked with seniors directly to test the idea. In preparation for the site visit, we asked school teams to invite seniors at a specific time during the day. For our test, each team member tested the idea.

One school’s live PDSA journey: During the testing phase, team members led a conversation with one or two seniors using the shared interview protocol. Team members took notes during the conversation to bring back to the study conversation. During this time, the coach observed.

Study

From our experience, the study phase is the part schools often skip. Slowing down to study takes time and energy but can also lead to powerful learning and efficacy that can support long-term improvement.

During the study phase of the live PDSA, we asked teams to share their experiences and discuss what they learned from the test by comparing their results to their learning questions, predictions, and measures. The coach provided feedback after the team’s conversation.

It is in this part of the process that each team member’s (or even the team’s collective) mental model is tested by what actually happened. During the plan phase, the team had certain assumptions that might now be challenged by reality. We have found that these moments lead to effective conversations and professional growth.

One school’s live PDSA journey: After the senior interviewees exited, the team studied the results of the test. Each team member shared their notes, then the group discussed what they heard compared to what they predicted they would hear from students.

Team members were surprised to find that, while they predicted that all students would be able to articulate a postsecondary plan, some could not and noted that they were struggling with selecting which postsecondary institutions to apply to. All students noted that the conversation was helpful, and most students asked for a follow-up.
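The comparison of predictions with results described above can be sketched in a few lines of Python. This is an illustration only: the names `StudyItem` and `surprises` are hypothetical, and the counts are invented placeholders, since the article does not report how many students were interviewed or what the team predicted for each question.

```python
from dataclasses import dataclass


@dataclass
class StudyItem:
    question: str
    predicted: int  # number of students the team expected
    observed: int   # number of students recorded in the team's notes


def surprises(items: list[StudyItem]) -> list[str]:
    """Return the questions where the observation differed from the prediction."""
    return [item.question for item in items if item.observed != item.predicted]


# Hypothetical counts for illustration only; these are not the team's data.
items = [
    StudyItem("Students who can articulate a postsecondary plan",
              predicted=8, observed=5),
    StudyItem("Students who found the conversation helpful",
              predicted=8, observed=8),
]

# Each surprise is a starting point for the study conversation.
for question in surprises(items):
    print("Discuss:", question)
```

The gaps flagged this way are where the team's mental model and the students' experience diverged, which is where the study conversation tends to be most productive.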

Act

Finally, teams were asked to act, considering what they want to do next. Should they:

- Expand the idea to learn more?

- Adapt the idea or bundle it with another change?

- Adopt the idea?

- Abandon the idea?

The important element during this phase is for team members to consider how confident they are in the effectiveness of a given idea before implementation. Team members can use additional PDSA tests to build confidence as they spread the idea and test under a variety of contexts. In most cases, learning beyond one PDSA cycle is necessary.

One school’s live PDSA journey: The team decided to expand the idea with a specific focus on tightening the interview protocol, with the eventual goal of possibly spreading the idea. In particular, team members wanted to ensure the check-in protocol and process were tight before spreading schoolwide.

Our learning

“We don’t need to launch an idea to all students all at once.”

“We can run a full cycle of learning in under two hours — that is amazing!”

“We can plan a PDSA in 15 minutes and study in 10 minutes — it can be quick.”

“Roles matter in planning the PDSA. We need to make sure all voices are heard.”

“The predictions can lead to transformational conversations that are not possible without PDSA cycles.”

“The idea we tested could work with more students, but I want to try […].”


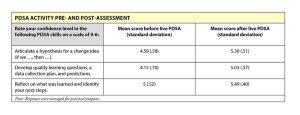

The statements above are reflections collected from the 32 school teams in surveys and debriefs after the live PDSA activity. Twenty-nine school teams responded to the same pre- and post-assessments of their confidence in key testing skills: articulating a hypothesis, developing learning questions and a data collection plan, reflecting on what was learned, and identifying next steps. In nearly all cases, teams felt more confident in running quality PDSA cycles in the future. See the results in the table “PDSA activity pre- and post-assessment.”
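A pre- and post-assessment like the one described above can be summarized as a change in mean confidence per skill. The sketch below assumes a 1-to-5 confidence scale and uses invented ratings; neither the scale nor the numbers come from our assessment, and the function name `mean_change` is hypothetical.

```python
from statistics import fmean

# Hypothetical ratings on an assumed 1-5 confidence scale (not real data).
pre = {
    "articulating a hypothesis": [2, 3, 4],
    "identifying next steps": [3, 3, 3],
}
post = {
    "articulating a hypothesis": [4, 4, 4],
    "identifying next steps": [3, 4, 5],
}


def mean_change(pre: dict[str, list[int]],
                post: dict[str, list[int]]) -> dict[str, float]:
    """Return post-assessment mean minus pre-assessment mean for each skill."""
    return {
        skill: round(fmean(post[skill]) - fmean(pre[skill]), 2)
        for skill in pre
    }


change = mean_change(pre, post)
for skill, delta in change.items():
    print(f"{skill}: {delta:+.2f}")
```

A positive value means teams rated themselves as more confident after the activity. Self-reported confidence is a perception measure, so it is best read alongside the quality of the PDSA cycles teams go on to run.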

Throughout the testing of the live PDSA, our thinking was solidified: the quality, not simply the quantity, of PDSAs matters, and education professionals need time and space to practice the skills necessary for quality testing. A live PDSA — a two-hour investment — allows time for school teams to practice a full testing cycle that includes a clear hypothesis, learning questions, predictions, and measures, which enables a team to analyze what they thought would happen compared to what actually happened.

The moments when prediction and reality diverge are often the moments when teams can explore what elements of the system are producing the outcomes, for whom, and when. In this exploration, educators can meet the goal of improving outcomes while better understanding the system, challenge their mental models, and hopefully build a future where every student can thrive.


References

Langley, G.J., Moen, R.D., Nolan, K.M., Nolan, T.W., Norman, C.L., & Provost, L.P. (2009). The improvement guide: A practical approach to enhancing organizational performance. Jossey-Bass.
