IDEAS

Program evaluation and design go hand-in-hand in Tennessee

By Joe Anistranski, Karen Harper and Stephanie Zeiger

Categories: Data, Evaluation & impact, Improvement science/networks, Technology

February 2024




Recent Issues

WHERE TECHNOLOGY CAN TAKE US

April 2024

Technology is both a topic and a tool for professional learning. This...

EVALUATING PROFESSIONAL LEARNING

February 2024

How do you know your professional learning is working? This issue digs...

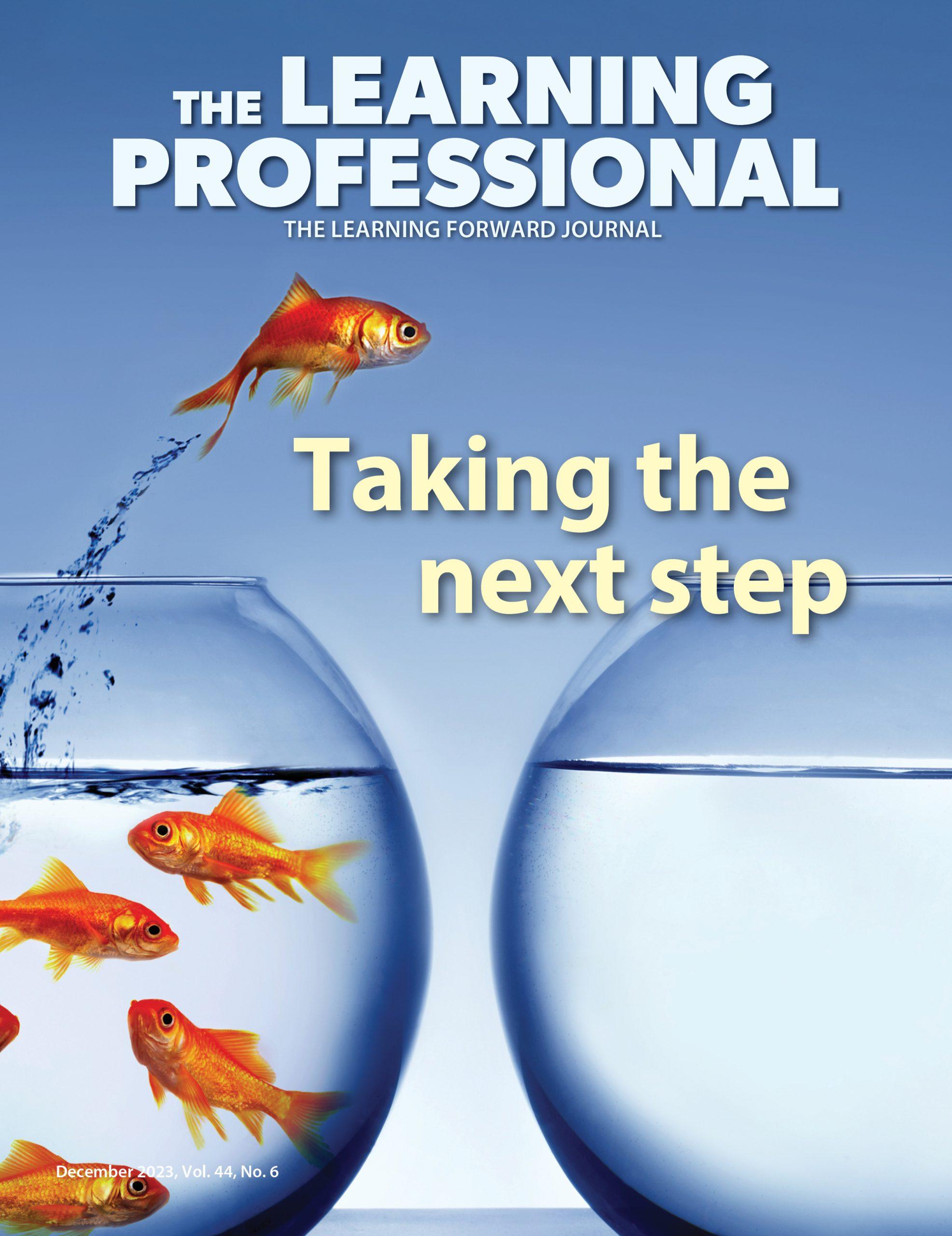

TAKING THE NEXT STEP

December 2023

Professional learning can open up new roles and challenges and help...

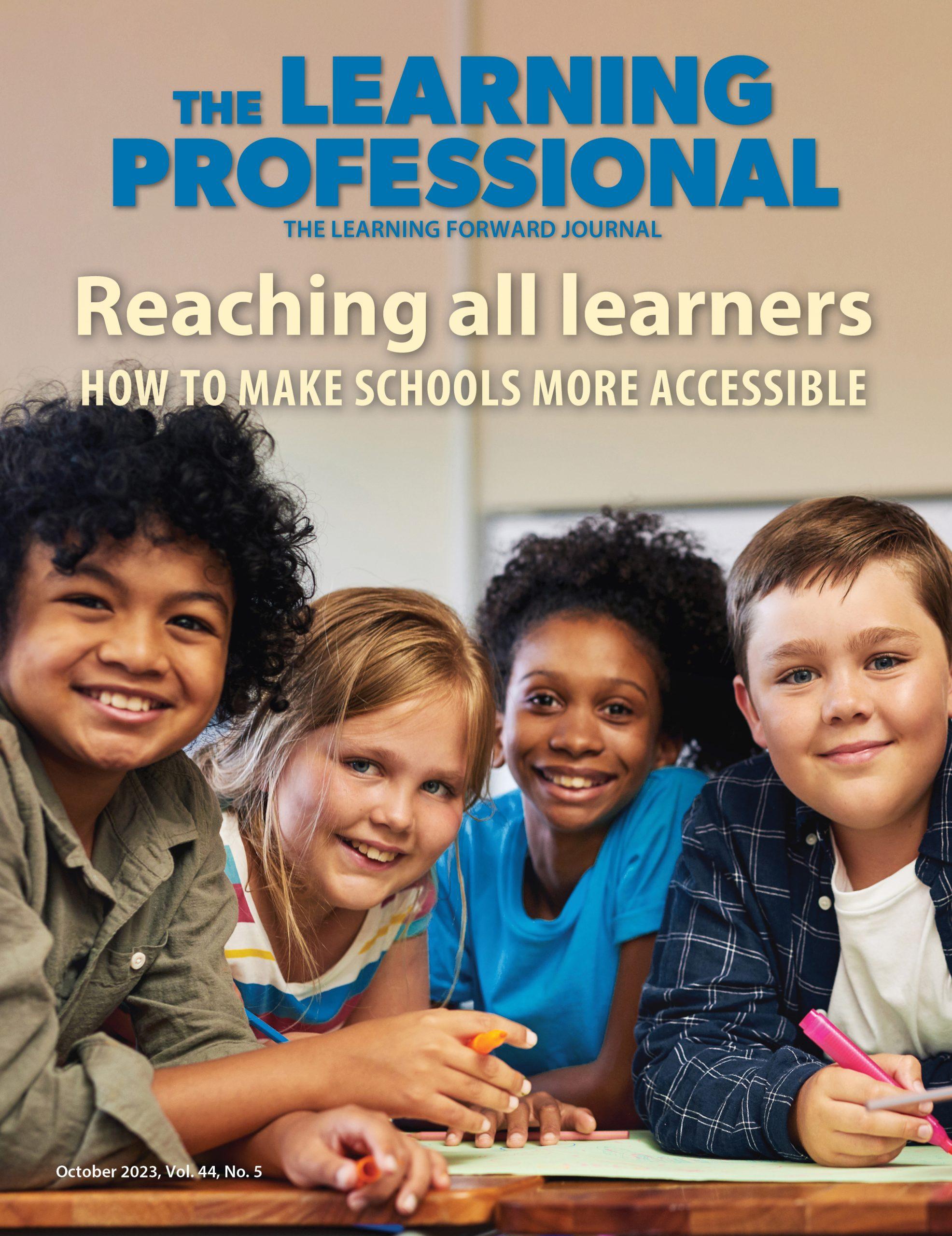

REACHING ALL LEARNERS

October 2023

Both special education and general education teachers need support to help...